Abstract

Response functions are a fundamental aspect of physics; they represent the link between experimental observations and the underlying quantum many-body state. However, this link is often under-appreciated, as the Lehmann formalism for obtaining response functions in linear response has no direct link to experiment. Within the context of quantum computing, and via a linear response framework, we restore this link by making the experiment an inextricable part of the quantum simulation. This method can be frequency- and momentum-selective, avoids limitations on operators that can be directly measured, and can be more efficient than competing methods. As prototypical examples of response functions, we demonstrate that both bosonic and fermionic Green’s functions can be obtained, and apply these ideas to the study of a charge-density-wave material on the ibm_auckland superconducting quantum computer. The linear response method provides a robust framework for using quantum computers to study systems in physics and chemistry.

Similar content being viewed by others

Introduction

Quantum computers are showing promise as quantum simulators of many-body physics, with the hope of being able to further our understanding of complex interacting systems. In order to realize this promise, a key task is to compute response functions for a prepared many-body state. They represent the experimental measurements that are performed on the physical realizations of such systems, and computing them via simulation is a critical step in connecting to experiments and building an understanding of the physics they contain. Examples of experiments that measure response functions are neutron scattering, optical spectroscopy, and angle-resolved photoemission spectroscopy (ARPES), which measure the spin-spin correlation, current-current correlation, and single-particle Green’s function, respectively1,2. The first two are bosonic correlation functions, while the latter is a fermionic correlation function. Both of these contain valuable information — both have direct links to experiments, and in addition the electronic Green’s function is a key ingredient in hybrid-classical algorithms such as dynamical mean field theory3,4,5,6,7,8.

In all cases, correlation functions involve expectation values of the form \(\langle {{{{{{{\bf{A}}}}}}}}(t){{{{{{{\bf{B}}}}}}}}(t^{\prime} )\rangle\); the particulars of A and B are set by the experiment or desired quantity. For example, in many condensed matter scattering experiments both A and B have definite momentum, i.e. a sum of local operators; in contrast, scanning probe microscopy makes use of purely local operators. This means that there is significant variation in A and B, and in turn the need for a comparable freedom in evaluating these on the quantum computer.

There are several existing techniques for computing correlation functions on quantum computers. Primary among these are methods based on the Hadamard test circuit, which rely on time evolution of a given state9,10,11,12,13,14,15,16; other methods (most of which rely on the Lehmann formalism1,17) include variational approaches18,19,20,21,22, spectral decomposition23,24,25, and linear systems of equation solvers26. Each of these has their own advantages and disadvantages, based on the particular quantum algorithms and hardware at hand. Moreover, extending beyond simple local unitary A and B comes with the cost of additional resources and increased error in the calculations.

In this work, we outline a method for calculating correlation functions based on a linear response framework that is in direct correspondence to experiments, as schematically illustrated in Fig. 1. The quantum state is driven with an applied field B with specific temporal and spatial structure, and the response of the system to that field A is measured as a function of space and time. The proportionality between the field and the response then yield the desired correlation function(s).

We establish an equivalence between the experimental measurement of a response function and an ancilla-free quantum simulation under a time dependent Hamiltonian that includes the perturbative excitation h(t)B. Following excitation, the system is evolved under \({{{{{{{{\mathcal{H}}}}}}}}}_{0}\), and A is measured. The functional derivative of A(t) = 〈A(t)〉 with respect to \(h({t}^{{\prime} })\) yields the retarded response function shown in the figure. The data shown is taken from Fig. 3.

The linear response framework enables using generic operators A and B (such as linear combinations) in the correlation function within a single quantum circuit, which has several downstream advantages. (i) Many correlation functions may be obtained at the same time, with one quantum circuit. (ii) Relying on a single quantum circuit avoids compounding errors in post-processing. (iii) Tailored excitations, e.g. those that focus on a particular energy or momentum range of the correlation function can be made. (iv) No ancilla qubits are needed. While more complex operators are also possible within the competing frameworks such as the Hadamard test, as it will be mentioned further, it comes at a significantly large additional cost.

We demonstrate the power of the linear response framework by applying it to the study of a two model systems. The first is a prototypical charge density wave system — the Su–Schrieffer–Heeger model27. We use the two fermionic methods with a momentum-selective field to obtain the electronic spectrum as would be measured by ARPES on IBM quantum hardware, and on a noisy simulator to compare the linear-response method to the Hadamard-test method. We next use the bosonic method and frequency selectivity to obtain the density-density response function of the same model system, as would be measured by momentum-resolved electron energy loss spectroscopy (M-EELS). Finally, we demonstrate the use of the technique for interacting models and compute the spectrum (retarded Green’s function) of the 1D Hubbard model. These developments make significant inroads to being able to use near-term quantum computers in real-world applications.

This work also has impact on classical computing; the approach described below allows for one to compute response functions by simply running time evolution on a classical computer which avoids the need to compute vertex corrections by solving a Bethe–Salpeter-like equation, as needed with conventional approaches2. While our work has existing analogs for bosonic correlation functions28,29,30,31, to the best of our knowledge the fermionic correlation functions have not yet been explored in this realm. Our work here does not focus on this classical application, but it should be clear that the approach developed here can be directly applied more broadly.

Results

To set the stage for our discussion, we will briefly outline the details of the Hadamard test9 approach for obtaining correlation functions, which underpins several of the currently used methods10,11,12,13,14,15,16 on near term quantum hardware, and shares the most characteristics with the linear response framework. The other methods are either variational18,19,20,21,22, or are not amenable to near-term quantum hardware23,24,25.

Figure 2 illustrates the circuit structure used for the Hadamard test. To measure 〈ψ∣A(t)B∣ψ〉 with Hadamard test method, one needs to apply A and B in a controlled fashion, i.e. apply them only if the ancilla qubit is in \(\left\vert 1\right\rangle\) state. It is efficient to do so when A and B are unitary operators that are local in qubit space, but when they are not, further resources are needed.

As an example, consider a correlation function in which the operator A is a linear combination of n Pauli strings where n is the number of qubits, \({{{{{{{\bf{A}}}}}}}}={\sum }_{i=1}^{n}{\zeta }_{i}{X}_{i}\), and B is still local and unitary. One can calculate this correlation function within this framework in two ways. The first way is to run several circuits to obtain correlation functions 〈ψ∣Xi(t)B∣ψ〉, and post-process the results. Further, we show that this approach is not quantum hardware noise-robust, and generates significantly more error compared to our (second) method. In addition, the number of shots required for this method scales with the number of local operators in the operators. It is simply because to ensure an error threshold ϵ for a linear combination of n terms, one must set the error for each term to ϵ/n. This in the end leads to a number of shots \({N}_{{{{{{{{\rm{shot}}}}}}}}}={{{{{{{\mathcal{O}}}}}}}}({n}^{2}/{\epsilon }^{2})\) per term, scaling quadratically with the system size. The second way is to implement the Linear Combination of Unitaries (LCU)32, to apply A directly on the state. For the case where A is not unitary, the LCU becomes a probabilistic algorithm with a success rate that decreases exponentially with the system size n. This can be cured for the case where the operator is unitary by Oblivious Amplitude Amplification, but at the cost of significantly increased circuit depth33.

The correlation functions we consider are of the form \(\langle {{{{{{{\bf{A}}}}}}}}(t){{{{{{{\bf{B}}}}}}}}({t}^{{\prime} })\pm {{{{{{{\bf{B}}}}}}}}({t}^{{\prime} }){{{{{{{\bf{A}}}}}}}}(t)\rangle\) — the amplitude of the operator A at time t given that B acted on the system at time \({t}^{{\prime} }\). The operators can be chosen to be local in position or momentum space to obtain spatial information about the system. The amplitudes are substracted in the case of bosonic correlation functions, whereas they are added in the case of fermionic correlation functions. Both can be calculated via the linear response method that we present here. We will first describe the formalism for bosonic correlation functions and describe how to apply momentum and frequency selectivity. Then, we describe two different ways to apply the linear-response formalism to calculate fermionic correlation functions for Hamiltonians that conserve particle count parity (maintain even or odd numbers of electrons). In what follows, we assume the existence of a pure or mixed state of interest on the quantum computer, which was prepared for example via variational approaches or adiabatic state preparation. In addition, we assume access to a method to implement evolution under both time-independent and time-dependent Hamiltonians. There are many choices for both; the complexities of these methods and others are detailed elsewhere34.

Bosonic (commutator) correlation functions

The methodology employs the standard results from linear response in many-body physics (see e.g. refs. 2,17 and 1), as we develop below. We are interested in the expectation value of the operator A(t) measured in a prepared many-body state \(\left\vert {\psi }_{0}\right\rangle\) and time-evolved in the Hamiltonian plus the applied (Hermitian) field; h(t)B that is, \({{{{{{{\mathcal{H}}}}}}}}(t)={{{{{{{{\mathcal{H}}}}}}}}}_{0}+h(t){{{{{{{\bf{B}}}}}}}}\). Then, A(t) is given by

where U(t), in Eq. (2), is the time ordered exponential for time evolution with respect to the time-dependent Hamiltonian plus field, and ts is the starting time such that t > ts, and \(h({t}^{{\prime} })=0\) for all \({t}^{{\prime} } < \, {t}_{s}\). Expanding A(t) with respect to h(t), we find

Here, \({\chi }^{R}(t,{t}^{{\prime} })\) is defined to be the functional derivative of A(t) with respect to \(h({t}^{{\prime} })\). Using Eqs. (1) and (2), we obtain

where we have \({{{{{{{\bf{A}}}}}}}}(t):={e}^{it{{{{{{{{\mathcal{H}}}}}}}}}_{0}}{{{{{{{\bf{A}}}}}}}}{e}^{-it{{{{{{{{\mathcal{H}}}}}}}}}_{0}}\). The θ-function arises because in Eq. (2) the integration region on the time ordered exponents is limited to \(\bar{t}\) values that are smaller than t. Since \({{{{{{{{\mathcal{H}}}}}}}}}_{0}\) is time independent, the response function \({\chi }^{R}(t,{t}^{{\prime} })\) only depends on the time difference \(t-{t}^{{\prime} }\). Fourier transforming from time to frequency, and using the convolution theorem, yields

Thus, if the amplitude of the signal h(t) is chosen to be small enough, the higher-order terms can be neglected and the response function can be calculated as a simple ratio in the frequency basis. Precisely how small the amplitude should be can be found in SI, and will be mentioned in the following sections.

One might be interested in the response function centered in a specific frequency interval and want to improve the signal-to-noise ratio of the calculation. This is achieved by choosing the frequency support of h(t) to be most concentrated within the desired frequency interval.

Similarly, by choosing A and B as operators with definite momentum, we can directly calculate the response function in the momentum basis. For example, for creation of a magnon with momentum k we can pick \({{{{{{{\bf{B}}}}}}}}={\sum }_{r}{e}^{ikr}{X}_{r}+\,{{\mbox{H.C.}}}\,={\sum }_{r}2\cos (kr){X}_{r}\), where Xr is Pauli X matrix applied on the rth site. In general, B = ∑rζrσr will be a linear combination of non-commuting Pauli strings. In that case, the signal can be implemented via 1st order Trotter-Suzuki approximation

For further error analysis, see SI. We can use a similar form for A as we use for B, but since it is directly measured (rather than appearing in the time evolution), this can simply be achieved with multiple circuits. However, if \({{{{{{{{\mathcal{H}}}}}}}}}_{0}\) is translation invariant, a single circuit is sufficient to calculate the response function χR in momentum space for a given momentum value, because it satisfies

that is, it is diagonal in momentum. This property is purely a result of translational invariance: momentum is always conserved. and thus unless \(k={k}^{{\prime} }\), the amplitudes must be zero, leading to Eq. (7).

Momentum and frequency selectivity allow us to immediately focus the signal we obtain from the quantum computer into desired ranges of momentum or frequency. This frequency selectivity is not easily performed with the Hadamard test35,36. Moreover, implementing a momentum selective operator can only be achieved via costly circuit modifications such as LCU. To avoid this, other approaches require each real space pair (r1, r2) to be measured separately with independent circuits; these are then Fourier transformed to obtain a momentum response function. On a noisy device, errors from the different circuits measurements can lead to both structured and unstructured noise (see Supplementary Note 2), reducing the precision of the final result. In the following sections, we show that momentum selectivity in our approach significantly reduces noise in the measured signal.

In short, the procedure to obtain the correlation function 〈ψ0∣[A(t), B]∣ψ0〉 is as follows:

-

1.

Evolve \(\left\vert {\psi }_{0}\right\rangle\) with the perturbed Hamiltonian \({{{{{{{\mathcal{H}}}}}}}}(t)={{{{{{{{\mathcal{H}}}}}}}}}_{0}+h(t){{{{{{{\bf{B}}}}}}}}\) during the time where h(t) is finite. h(t) should be a small field in order to ensure the simulation is in the linear response regime.

-

2.

Continue to evolve with the unperturbed Hamiltonian \({{{{{{{\mathcal{H}}}}}}}}\). The maximum length of time needed is set by the desired minimum energy resolution.

-

3.

At each time of interest t, measure A(t) = 〈A(t)〉.

-

4.

Fourier transform A(t) to A(ω) and divide by h(ω) to obtain χ(ω), thus performing the (numerical) functional differentiation.

Fermionic (anti-commutator) correlation functions

The most important fermionic correlation function is the retarded electronic Green’s function given by

where ci and \({c}_{j}^{{{{\dagger}}} }\) are the fermionic annihilation and creation operators at r = ri and rj, respectively. Note that Eq. (8) is the correlation function with respect to a single many-body state \(\left\vert {\psi }_{0}\right\rangle\). For the Green’s function at T = 0 in standard many-body theory \(\left\vert {\psi }_{0}\right\rangle\) is the ground state. At finite temperatures the expectation value has to be additionally averaged over a thermal distribution of states, which can be achieved via classical averaging of eigenstates37,38,39 or by going over to a density matrix representation32,40,41,42,43,44,45. The formalism below is applicable for any of these cases.

The functional derivative method does not directly carry over, because it requires adding a Grassman number valued field, which cannot be easily realized in a numerical simulation. This has thus far limited the potential of ancilla-free methods to bosonic correlation functions only35,36. To overcome this, we introduce two complementary approaches. The first uses an auxiliary operator P which anti-commutes with B, while the second uses simple post-selection.

First, we discuss a method based on the use of an auxiliary operator. We consider the fermionic version of Eq. (4), and denote this by \(G(t,{t}^{{\prime} }):\)

In order to produce an anticommutator, we introduce an additional operator P which satisfies the following properties

-

1.

\({{{{{{{\bf{P}}}}}}}}\left\vert {\psi }_{0}\right\rangle=s\left\vert {\psi }_{0}\right\rangle\) with s ≠ 0.

-

2.

{B(t), P} = 0 for all times t.

-

3.

\([{{{{{{{{\mathcal{H}}}}}}}}}_{0},{{{{{{{\bf{P}}}}}}}}]=0\), or P has no time dependence.

With these properties, it is straightforward to show that

This is of the form of Eq. (4) with A(t) replaced by A(t)P(t); therefore, the bosonic linear response method can be directly used.

When \(G(t,{t}^{{\prime} })\) is the retarded electronic Green’s function Eq. (8), the assumptions are satisfied by the parity operator for Hamiltonians that preserve particle parity; this covers a vast class of Hamiltonians of interest in quantum chemistry, condensed matter physics and quantum field theory. If the Hamiltonian of interest conserves the parity of the electron number, then the parity operator P = Z1Z2. . . Zn satisfies second and third conditions, where we use the spin representation (obtained after Jordan-Wigner transformation) to represent the parity operator. The fermionic operators, ci and \({c}_{i}^{{{{\dagger}}} }\), in their spin representation, have a Jordan-Wigner string attached46; that is, they are composed of i − 1 consecutive Z operators followed by a X ± iY:

In this case both ci and \({c}_{i}^{{{{\dagger}}} }\) anticommute with the parity operator P = Z1Z2. . . Zn, which satisfies the second condition. With this, \(G(t,{t}^{{\prime} })\) can be obtained by measuring Eq. (10) upon replacing A with \({\tilde{X}}_{i}{{{{{{{\bf{P}}}}}}}}\) (and/or \({\tilde{Y}}_{i}{{{{{{{\bf{P}}}}}}}}\)) and B with \({\tilde{X}}_{j}\) (and/or \({\tilde{Y}}_{j}\)).

While the requirements on P may seem restrictive, particle number parity conserving Hamiltonians form a large class containing many systems of interest. First, it covers any particle number conserving system such as molecules and condensed matter systems such as Hubbard model; all terms of these Hamiltonians have equal number of creation and annihilation operators (\({c}_{i}^{{{{\dagger}}} }{c}_{j},\;\;{c}_{i}^{{{{\dagger}}} }{c}_{j}^{{{{\dagger}}} }{c}_{r}{c}_{s},\ldots \)). In addition to these systems, this method of auxiliary operator works for Hamiltonians that contain pair creation/annihilation terms such as \({c}_{i}{c}_{j},\;\;{c}_{i}^{{{{\dagger}}} }{c}_{j}^{{{{\dagger}}} }\). These clearly do not conserve particle number; however, these terms generate or destroy even number of particles, leading to the conservation of the particle number parity operator. Similar terms are present in the effective theories for superconductivity.

We can choose h(t) and B to have frequency and momentum selectivity in the same way as we did for bosonic correlation functions. Thus, we can directly calculate the fermionic Green’s function in momentum space,

by selecting A as a Fourier combination of \({\tilde{X}}_{i}{{{{{{{\bf{P}}}}}}}}\) (and/or \({\tilde{Y}}_{i}{{{{{{{\bf{P}}}}}}}}\)) with momentum k, and B as a Fourier combination of Xj (and/or Yj) with momentum \({k}^{{\prime} }\), and forming the appropriate linear combination to select the desired c/c† terms. Similarly, by choosing an appropriate frequency support for h(t), we can calculate GR in a desired frequency range.

We next turn to a post-selection method to obtain fermionic single-particle Green’s functions. When the desired anti-commutator is the single-particle Green’s function (Eq. (8)) for a particle number conserving Hamiltonian, i.e. \(\left\vert {\psi }_{0}\right\rangle\) is an N-particle wave function, a powerful alternate approach exists. A complete derivation is shown in the supplementary material; we outline the salient parts here. Let us specify our perturbing field as

Position or momentum selectivity can be imposed by the choice of αm. Starting from a wavefunction with N particles and evolving with \({{{{{{{{\mathcal{H}}}}}}}}}_{0}+h(t){{{{{{{\bf{B}}}}}}}}\), the system will be in a superposition of the N − 1, N, and N + 1 particle sectors to linear order in h(t). For clarity, let us choose h(t) = ηδ(t) where η ≪ 1 and δ(t) is a Dirac delta pulse. This choice is not necessary, we can choose h(t) more generally to achieve frequency selectivity. In order to measure the Green’s function we apply a rotation about y (or x) to enable measurement of \({c}_{1}\pm {c}_{1}^{{{{\dagger}}} }\) on the first qubit, which generates N − 2 and N + 2 particle states as well. Denoting \(\left\vert {\Phi }_{M}^{y}\right\rangle\) (or \(\left\vert {\Phi }_{M}^{x}\right\rangle\)) as the M particle component of this final state, the state right before the measurement with y rotation is

and with y ↔ x for the x rotation case. The \(\left\vert {\Phi }_{M}^{x(y)}\right\rangle\) components of the final state are not normalized, and in fact their norms give the probability to observe M particles. These components can be separated via post selection, and quantities such as \(\langle {\Phi }_{M}^{y}| {\Phi }_{M}^{y}\rangle\) and \(\langle {\Phi }_{M}^{y}| {c}_{i}^{{{{\dagger}}} }{c}_{i}| {\Phi }_{M}^{y}\rangle\) can be measured within the M particle sectors. In Supplementary Note 3, we show that the following linear combinations of those quantities yield the desired Green’s functions:

where the fermionic Green’s functions are1,

While this is limited to particle-conserving Hamiltonians, this is a relatively mild restriction as all fermionic Hamiltonians that do not have superconducting terms (pair-creation and pair-annihilation) satisfy this restriction.

Error analysis and scalability

In Supplementary Note 6, we analyze three different sources of error for a time local signal h(t) = ηδ(t) in detail: non-linear excitations, Trotter error for the driving field eiηB, and the statistical error coming from measurement. To ensure that the non-linear contribution is smaller than ϵNL, the signal amplitude should be chosen as \(\eta={{{{{{{\mathcal{O}}}}}}}}({\epsilon }_{{{{{{{{\rm{NL}}}}}}}}}/| | {{{{{{{\bf{B}}}}}}}}| {| }^{2}| | {{{{{{{\bf{A}}}}}}}}| | )\), where ∣∣.∣∣ is the spectral norm. We further show that 1st order Trotter error is \({{{{{{{\mathcal{O}}}}}}}}({\epsilon }_{{{{{{{{\rm{NL}}}}}}}}}{\alpha }_{{{{{{{{\rm{comm}}}}}}}}}^{1}({{{{{{{\bf{B}}}}}}}})/| | {{{{{{{\bf{B}}}}}}}}| | r)\) where r is the number of Trotter steps used to apply eiηB, and \({\alpha }_{{{{{{{{\rm{comm}}}}}}}}}^{1}\) is defined in ref. 47 Theorem 1. Finally, the statistical error of measurement is \({{{{{{{\mathcal{O}}}}}}}}(| | {{{{{{{\bf{A}}}}}}}}| {| }^{2}| | {{{{{{{\bf{B}}}}}}}}| {| }^{2}/{\epsilon }_{{{{{{{{\rm{NL}}}}}}}}}\sqrt{{N}_{{{{{{{{\rm{shot}}}}}}}}}})\), where Nshot is the sample size, or number of shots.

If the operators A and B are linear combinations of Poly(n) Pauli strings where n is the number of qubits or the system size, and assuming the coefficients do not depend on n, we find that the spectral norms ∣∣A∣∣, ∣∣B∣∣ and the commutative norm \({\alpha }_{{{{{{{{\rm{comm}}}}}}}}}^{1}({{{{{{{\bf{B}}}}}}}})\) scale polynomially with the system size, which lead to a requirement of \({N}_{{{{{{{{\rm{shot}}}}}}}}}={{{{{{{\mathcal{O}}}}}}}}({{{{{{{\rm{Poly(n)}}}}}}}}/{\epsilon }_{{{{{{{{\rm{NL}}}}}}}}}^{2}{\epsilon }_{{{{{{{{\rm{meas}}}}}}}}}^{2})\) number of shots to keep the measurement error less than ϵmeas. Thus, the method is scalable for various cases including momentum definite response functions.

For many response functions the operators A and B are intensive quantities, and thus are normalized accordingly with the system size. In turn, their operator norm and hence the response function’s maximum possible value is 1. When \({\alpha }_{{{{{{{{\rm{comm}}}}}}}}}^{1}({{{{{{{\bf{B}}}}}}}})=0\) the Poly(n) term vanishes, and \({N}_{{{{{{{{\rm{shot}}}}}}}}}={{{{{{{\mathcal{O}}}}}}}}(1/{\epsilon }_{{{{{{{{\rm{NL}}}}}}}}}^{2}{\epsilon }_{{{{{{{{\rm{meas}}}}}}}}}^{2})\), completely independent of the system size. For spin systems where excitations of the response function are linear combinations of commuting local variables, this leads to a better result compared to the Hadamard test, which has a quadratically scaling number of shots with the system size.

However for response functions such as momentum-definite single particle fermion Green’s functions, because none of the Pauli strings in B commute with each other, \({\alpha }_{{{{{{{{\rm{comm}}}}}}}}}^{1}({{{{{{{\bf{B}}}}}}}})={{{{{{{\mathcal{O}}}}}}}}(n)\), and \({\epsilon }_{{{{{{{{\rm{NL}}}}}}}}}={{{{{{{\mathcal{O}}}}}}}}(r/n)\). In Supplementary Note 7, and as discussed in ref. 48, we observe that the theoretical error bounds are loose and lead to higher resource estimates than required. As such, even r = 1 might be sufficient to achieve good results, although the theoretically estimated number of shots can also be made independent of the system size by choosing the number of Trotter steps r = n.

Green’s function of the SSH model

We demonstrate the linear response approach by calculating the fermionic Green’s function as would be measured by ARPES (angle-resolved photoemission spectroscopy). We study a minimal model for a charge density wave known as the Su–Schrieffer–Heeger (SSH) model — an N-site 1D spinless free fermionic chain with nearest-neighbor bond-dependent hoppings (see Fig. 3a) — in the limit where the lattice distortion is static,

For finite δ this model exhibits a charge density wave, with a gap proportional to δ.

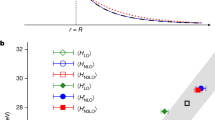

a Lattice and hopping structure of the SSH model. b Compressed linear response method quantum circuit run on ibm_auckland (For system and calibration details, see Supplementary Note 5). XY indicates a rotation about XX followed by YY, or to be more explicit, it is \(\exp (i\theta (XX+YY))=\exp (i\theta XX)\exp (i\theta YY)\). We find the angles of each gate by the algebraic compression method given in50,51. c Fermionic correlation function \({{{{{{{{\mathcal{L}}}}}}}}}_{k}(t)=2\;{{{\rm{Re}}}}\;{G}_{k}(t)\) for δ = 0 using the commutator method. Data for other values of δ are available in the Supplementary Note 1. d Normalized power spectrum \(| {{{{{{{{\mathcal{L}}}}}}}}}_{k}(\omega ){| }^{2}\). e–g Normalized false-color plots of \(| {{{{{{{{\mathcal{L}}}}}}}}}_{k}(\omega ){| }^{2}\) for δ = {0, 0.4, 0.8}. Green dashed lines indicate the expected bounds of the gap, and the red lines the analytically obtained spectrum. h, i Normalized false-color plot of post-selected \(\langle {\Phi }_{0}^{y}| {\Phi }_{0}^{y}\rangle\) and \(\langle {\Phi }_{1}^{y}| {\Phi }_{1}^{y}\rangle\), respectively (see text for definition). The projected norms contain the same spectral information as \({{{{{{{{\mathcal{L}}}}}}}}}_{k}(\omega )\).

To reduce our quantum computing resource needs, we apply a number of simplifications particular to this example. First, we address the issue of ground state preparation. Normally to calculate the single particle Green’s function for this model, one should first generate the ground state of the model. There are numerous methods to generate the ground state or an approximate ground state for a given model34. The Green’s function then can be calculated by our linear response method. Since our purpose here is to demonstrate our algorithm, we adjust the chemical potential to μ = −5 so that the ground state is the trivial no particle state. To be clear, our method can calculate the response function on any state. But for that response function to be a Green’s function, the state must be the ground state.

The second simplification is made in the operators we measure and apply, i.e. A and B. We use a momentum-selective instantaneous (and thus broadband) driving field coupled to the particle creation and annihilation operators that act on all the sites i,

with a pulse h(t) = ηδ(t), where we used η = 0.04. Because the ground state contains no particles, this operator is equivalent to \({{{{{{{\bf{B}}}}}}}}\equiv {\sum }_{i}\cos (k{r}_{i}){X}_{i}\), which is much simpler to implement, because all Pauli strings within it are 1-qubit operators, and commute with each other, therefore avoiding Trotter error. We measure \({{{{{{{\bf{A}}}}}}}}={X}_{0}={c}_{0}+{c}_{0}^{{{{\dagger}}} }\) which is local in position, and includes all momentum modes. Due to momentum conservation, measuring any \({\widetilde{X}}_{i}\) would lead to the same result, but we choose \({{{{{{{\bf{A}}}}}}}}={\widetilde{X}}_{0}={X}_{0}\) to minimize the weight of the measured Pauli string (and thus reduce measurement noise49). By measuring X0 we obtain

In the frequency basis, this becomes (see Supplementary Note 4 for details)

In general, to measure Gk(ω), one should isolate the spectrum of \({{{{{{{{\mathcal{L}}}}}}}}}_{k}(\omega )\) from Gk(−ω)*, which requires measuring A = Y0 as well. However, since μ = −5, for this model the single particle energies are manifestly positive, and the interference between Gk(ω) and Gk( − ω) is negligible. Thus, \(| {{{{{{{{\mathcal{L}}}}}}}}}_{k}(\omega ){| }^{2}\) tracks the quasi-particle peaks in \({{{{{{{\rm{Im}}}}}}}}\;{G}_{k}(\omega )\), and measuring \({{{{{{{{\mathcal{L}}}}}}}}}_{k}(\omega )\) is sufficient to obtain the single-particle spectrum.

We performed the calculation on the 27-qubit ibm_auckland superconducting quantum computer (for system and calibration details, see Supplementary Note 5) for an N = 8-site chain, which has allowed momentum values \(k=\frac{2\pi }{N}j,\, j\in \left\{0\ldots 7\right\}\). Since the driving field B is symmetric in k, both k and − k are obtained at the same time. We used a compressed free fermionic evolution, which is discussed in detail in refs. 50,51 and further simplified into the circuit in Fig. 3b (see Supplementary Note 4 for details). Figure 3c shows the raw data for \({{{{{{{{\mathcal{L}}}}}}}}}_{k}(t)\) with δ = 0 at each unique k; the data was obtained from ibm_auckland via the parity operator method. The power spectrum is shown in Fig. 3d–e. While the data from the quantum computer appears quite noisy, in the frequency regime of interest there is only a single peak present in the Fourier transform, illustrating the remarkable strength of a momentum-selective probe, which picks out the single energy at each momentum, together with Fourier filtering. Upon increasing δ (Fig. 3f, g), a gap opens up in the spectrum (time traces and Fourier power spectra are available in the supplementary material). The spectrum for δ = 0.4 is noisier than the other two, which we attribute to machine noise from those particular measurements. In panels h and i, we plot the norms of 0- and 1- particle components of the state right before the measurement, i.e. \(\langle {\Phi }_{0}^{y}| {\Phi }_{0}^{y}\rangle\) and \(\langle {\Phi }_{1}^{y}| {\Phi }_{1}^{y}\rangle\), where \(\left\vert {\Phi }_{M}^{y}\right\rangle\) is defined above Eq. (15). Both methods faithfully reproduce the power spectrum, with slightly higher levels of noise for post-selection on N = 1.

For each k and δ shown in Fig. 3 we collected 3 data sets with 8000 shots each, yielding 24,000 shots total per curve. We did not use read out error mitigation, however we incorporated dynamical decoupling and Pauli twirling as implemented in the qiskit_research package52. The raw and calibration data are shown in the supplementary material.

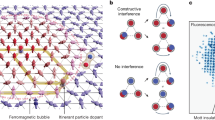

In order to further underscore the power of the momentum-selective linear response approach, we compare its effectiveness to a position-selective linear response and Hadamard test methods in Fig. 4 on a noisy simulator with one/two qubit noise of 1% and 10%, respectively. Compared to the momentum-selective linear response method, the position-selective one is noisier, but without particular structure. The Hadamard test, on the other hand, exhibits streaks that arise from leakage of signal from one momentum to the others. There are two key reasons for the differences seen in the figure. First, both position-selective and Hadamard test methods involve excitations at each position (Xi in the figure). These must be combined in the post-processing with a Fourier transform. But, because a Fourier transform relies on constructive/destructive interference between signals, and we are performing this on noisy data, the interference is not perfect, which leads to leakage between momentum channels. When the circuits to be run for each Xi are not identical in structure, the noise becomes dependent on i. This also leads to a momentum-dependent noise term fk, which in turn appears in the momentum space signal as

which we derive in the Supplementary Note 2. Second, the Hadamard test method introduces more of the same problem because each Xi is a separate circuit — in addition to needing more circuits to be run and an additional ancilla. This further exacerbates the issue with the Fourier analysis. The momentum-selectivity avoids these issues by making a unique excitation and thus producing a response function with a single large contribution.

The circuit diagrams schematically represent the 3 approaches: a linear response with momentum selectivity, b linear response in position space and c Hadamard test in position space. The simulations were run on a noisy simulators with one/two qubit noise of 1% and 10%, respectively. While the momentum selective linear response method directly yields \({{{{{{{{\mathcal{L}}}}}}}}}_{k}(t)\), an additional spatial Fourier transformation is needed for the other two methods.

Green’s function of the 1D Hubbard model

The method is equally applicable to interacting models that are not simply integrable; here we demonstrate this by calculating the Green’s function of a periodic 1D Hubbard model with N = 6 sites (12 spin-orbitals). The Hamiltonian for the model is

where \({n}_{i,\sigma }={c}_{i,\sigma }^{{{{\dagger}}} }{c}_{i,\sigma }\). The first term in the Hamiltonian is the nearest-neighbor hopping between sites, the second term governs the on-site electron-electron interactions with strength U, and the last term sets the chemical potential for the model. We set μ = 0, so the ground state of the system is half-filled. The calculations were performed using a statevector simulator, with the ground state prepared via exact diagonalization. We obtained the retarded Green’s function via Eq. (10) for different interaction strengths U using a momentum-selective driving field (c.f. Eq. (21)) and a Gaussian temporal profile. The results are shown in Fig. 5 for various values of U. With increasing interaction strength, a gap opens in the spectrum, satellite peaks appear, and a reduction in the weights of the sub-bands occurs, all in agreement with the known results for this model53,54.

Polarizability of the SSH model

We next consider the polarizability χ(q, ω) of the 1D chain. The polarizability is the response of the electronic system to an applied potential. It plays a critical role in the screening of interactions between electrons in solids and molecules, and in their electromagnetic properties. Experimentally, the polarizability can be studied by light absorption or scattering, or by momentum-resolved electron energy loss spectroscopy (M-EELS). The polarizability is defined by

i.e. it is a charge-charge correlation function. Here δn is the change in the charge from the equilibrium density. The observable A is the charge, and the applied field B (which is coupled to the charge) is a potential. The excitations are changes in the density, which are composed of pairs of fermionic operators, and thus this is a bosonic correlation function.

For this demonstration, B acts on a single site, and we classically simulate a partially filled 24-site chain (μ = 0.9, which was chosen to reveal the salient features of a 1D system). As discussed above, one of the advantages of the linear response framework is that all 24 correlation functions are obtained with a single calculation. Figure 6a shows \({{{{{{{\rm{Im}}}}}}}}\,\chi (q,\omega )\), which is the double Fourier transform of χ(r, t) obtained from driving a single site with a sharp h(t). \({{{{{{{\rm{Im}}}}}}}}\,\chi (q,\omega )\) has all the textbook features of the response of a 1D charged system55; there is no response at all at q = 0 due to charge conservation, there is a narrow dispersive feature at low q, ω that broadens with increasing q, and a low-energy turnover with a minimum at 2kF.

Both panels show \({{{{{{{\rm{Im}}}}}}}}\,\chi (q,\omega )\) in false color. The insets show the driving field h(t) and its Fourier transform. a χ(q, ω) obtained from the response due to a sharp excitation with height 0.1. b χ(q, ω) obtained from the response of a frequency selective field. The dashed lines indicate the range where ∣h(ω)∣2 < 10−3. Here, we used h(t) as sinusoid with a Gaussian profile of width σ = 0.625, height 0.05, and centered at ω = 1.5.

Since h(ω) has support across the entire spectrum of χ(q, ω) (shown in the inset), the entire spectrum can be obtained from this measurement. This is in contrast to panel b, where we drive with a short-duration sinusoid centered at ω = 1.5. This excitation is frequency-selective; that is, it only excites the system at frequencies where h(ω) has finite support. This range of frequencies is indicated by dashed lines in the figure. With our particular choice of h(t), we are able to observe some of the middle range of excitations, but are insensitive to the lower frequencies and the top of the spectrum. Note that there is no restriction on the Fourier transform of χ(r, t) per se; rather, the need to divide by h(ω) (see Eq. (4)) limits the applicable window to the ranges where h(ω) is sufficiently far from zero.

Discussion

The linear-response based formalism is a shift in perspective on quantum simulation where the experiment itself is simulated. This is in contrast to Hadamard test based approaches, where the system is simulated and the desired observables are extracted either outside of the system qubits and/or from a large excitation. This shift in perspective and methodology enables a much broader set of observables to be envisioned and easily calculated without additional post-processing. And, unlike variational methods for computing response functions18,19,20,21,22, it relies almost entirely on time evolution, a task for which quantum computers are well-suited.

The linear response method enables efficiently obtaining a number of dynamical properties. The fact that it is efficient is important because we expect quantum computation to remain costly for quite some time – this is true on today’s NISQ hardware, and will also be true for early fault tolerant quantum computing. Thus, if the properties can be obtained in as few circuits as possible, and with a higher tolerance for error, this is beneficial. Our proposed algorithm achieves precisely this.

This shift in perspective and the resulting implementation leads to several clear advantages:

-

1.

Efficiency is achieved by enabling the measurement of many correlation functions at the same time (see also Gustafson et al.35). For example, a local excitation can provide a researcher access to χ(r, t) given in (26) for all values of r because all nr can be measured simultaneously.

-

2.

Additional efficiency and noise resiliance are achieved by enabling the excitation/measurement to be any Hermitian operator, potentially obviating the need to run many circuits and having to post-process the data from multiple different circuits, each with its own noise characteristic.

-

3.

Being able to make tailored excitations means that researchers can pinpoint the regime they are interested in and study precisely the excitations of interest, within a single circuit. This is an improvement over rather than having to extract the signal of interest out of the full system response (plus the noise), or having to rely on cancellation between the (noisy) results obtained from several circuit runs.

-

4.

Fault tolerant quantum computing comes at a significant qubit overhead cost. An algorithm that has a high tolerance for error and requires 1 fewer qubit will enable earlier hardware calculation of response functions.

Fermionic response functions (anti-correlation functions) can be obtained with the same experimentally centered, linear response perspective; this is unlike other ancilla-free methods35,36 which are limited to bosonic response functions. The post-selection method is intuitive, as the particle number sectors are clearly delineated. On the other hand, the auxiliary operator method is an unusual perspective; it is sufficient to measure almost the same operator as for the bosonic correlation function. The electron Green’s function, for example, is obtained simply by keeping track of the parity as well as the occupation number measurement. In either case, this is an important advance since electron Green’s functions play a key role in physics; as an important measurement per se56, and as an ingredient in embedding theories such as dynamical mean field theory3,4,5,6,7,8.

While here we have explicitly demonstrated the linear response approach in the context of a charge density wave, it is a general method to obtain response functions, and is not limited to electronic Hamiltonians. It can be applied to spin or bosonic models, or other models from fields where quantum simulation plays a role, including chemistry and high energy physics. Different choices of A and B extend the method to a wide variety of observables. For example, the conductivity is a current-current correlation function, for which h(t) is an applied electric field. A zz-spin susceptibility can be obtained with h(t) as a z-axis magnetic field, and the operators A = B = Sz. Moving forward, the functional derivative formalism can be extended to higher order derivatives that involve multiple driving fields. One notable application is resonant inelasic X-ray scattering (RIXS), which is a four-point correlation function57, which is very challenging to calculate via diagrammatics. In addition, and aside from direct experimental probes, pairing vertices in superconductors and other ordered phenomena also fall into this class of observables. We reserve these discussions for future work.

Data availability

All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials. The data for the Figs. 3, 4, 5 and 6 are available at https://doi.org/10.5061/dryad.51c59zwcn.

Code availability

Code is available from the corresponding author upon request.

References

Mahan, G. D., Many Particle Physics (Springer, 2010).

Stefanucci, G. & van Leeuwen, R., Nonequilibrium Many-Body Theory of Quantum Systems: A Modern Introduction, 1st ed. (Cambridge University Press, 2013).

Georges, A., Kotliar, G., Krauth, W. & Rozenberg, M. J. Dynamical mean-field theory of strongly correlated fermion systems and the limit of infinite dimensions. Rev. Modern Phys. 68, 13 (1996).

Zgid, D. & Chan, G. K.-L. Dynamical mean-field theory from a quantum chemical perspective. J. Chem. Phys. 134, 094115 (2011).

Rungger, I. et al. Dynamical mean field theory algorithm and experiment on quantum computers. Preprint at https://arxiv.org/abs/1910.04735 (2020).

Keen, T., Maier, T., Johnston, S. & Lougovski, P. Quantum-classical simulation of two-site dynamical mean-field theory on noisy quantum hardware. Quantum Sci. Technol. 5, 035001 (2020).

Steckmann, T. et al. Mapping the metal-insulator phase diagram by algebraically fast-forwarding dynamics on a cloud quantum computer. Phys. Rev. Res. 5, 023198 (2023).

Jamet, F., Agarwal, A., & Rungger, I. Quantum subspace expansion algorithm for Green’s functions. Preprint at https://arxiv.org/abs/2205.00094(2022).

Somma, R., Ortiz, G., Gubernatis, J. E., Knill, E. & Laflamme, R. Simulating physical phenomena by quantum networks. Phys. Rev. A 65, 042323 (2002).

Chiesa, A. et al. Quantum hardware simulating four-dimensional inelastic neutron scattering. Nat. Phys. 15, 455 (2019).

Roggero, A. & Carlson, J. Dynamic linear response quantum algorithm. Phys. Rev. C 100, 034610 (2019).

Francis, A., Freericks, J. K. & Kemper, A. F. Quantum computation of magnon spectra. Phys. Rev. B 101, 014411 (2020).

Kosugi, T. & Matsushita, Y. Construction of Green’s functions on a quantum computer: Quasiparticle spectra of molecules. Phys. Rev. A 101, 1 (2020).

Kosugi, T. & Matsushita, Y. Linear-response functions of molecules on a quantum computer: Charge and spin responses and optical absorption. Phys. Rev. Res. 2, 033043 (2020).

Endo, S., Kurata, I. & Nakagawa, Y. O. Calculation of the Green’s function on near-term quantum computers. Phys. Rev. Res. 2, 033281 (2020).

Libbi, F., Rizzo, J., Tacchino, F., Marzari, N. & Tavernelli, I. Effective calculation of the Green’s function in the time domain on near-term quantum processors. Phys. Rev. Res. 4, 043038 (2022).

Bruus, H. & Flensberg, K. Many-body quantum theory in condensed matter physics: an introduction (Oxford University Press, Oxford, 2004).

Chen, H. et al. Variational quantum eigensolver for dynamic correlation functions. Phys. Rev. A 104, 032405 (2021).

Gyawali, G. & Lawler, M. J. Adaptive variational preparation of the Fermi-Hubbard eigenstates. Phys. Rev. A 105, 012413 (2022).

Lee, C. K., Zhang, S.-X., Hsieh, C.-Y., Zhang, S., & Shi, L. Variational quantum simulations of finite-temperature dynamical properties via thermofield dynamics. Preprint at https://arxiv.org/abs/2206.05571 (2022).

Jensen, P. W. K., Johnson, P. D. & Kunitsa, A. A. Near-term quantum algorithm for computing molecular and materials properties based on recursive variational series methods. Phys. Rev. A 108, 022422 (2023).

Huang, K. et al. Variational quantum computation of molecular linear response properties on a superconducting quantum processor. J. Phys. Chem. Lett. 13, 9114 (2022).

Ciavarella, A. Algorithm for quantum computation of particle decays. Phys. Rev. D 102, 094505 (2020).

Roggero, A. Spectral-density estimation with the Gaussian integral transform. Phys. Rev. A 102, 022409 (2020).

Keen, T., Dumitrescu, E., and Wang, Y. Quantum algorithms for ground-state preparation and Green’s function calculation. Preprint at https://arxiv.org/abs/2112.05731 (2021).

Tong, Y., An, D., Wiebe, N. & Lin, L. Fast inversion, preconditioned quantum linear system solvers, fast Green’s-function computation, and fast evaluation of matrix functions. Phys. Rev. A 104, 032422 (2021).

Su, W. P., Schrieffer, J. R. & Heeger, A. J. Solitons in polyacetylene. Phys. Rev. Lett. 42, 1698 (1979).

Eckstein, M. & Kollar, M. Theory of time-resolved optical spectroscopy on correlated electron systems. Phys. Rev. B 78, 205119 (2008).

Kumar, A. & Kemper, A. Higgs oscillations in time-resolved optical conductivity. Phys. Rev. B 100, 174515 (2019).

Zawadzki, K., Yang, L. & Feiguin, A. E. Time-dependent approach to inelastic scattering spectroscopies in and away from equilibrium: Beyond perturbation theory. Phys. Rev. B 102, 235141 (2020).

Zawadzki, K., Nocera, A. & Feiguin, A. A time-dependent momentum-resolved scattering approach to core-level spectroscopies. SciPost Phys. 15, 166 (2023).

Gilyén, A., Su, Y., Low, G. H., & Wiebe, N. Quantum singular value transformation and beyond: exponential improvements for quantum matrix arithmetics. In Proceedings of the 51st Annual ACM SIGACT Symposium on Theory of Computing 193–204 (ACM, 2019).

Berry, D. W., Childs, A. M., Cleve, R., Kothari, R., and Somma, R. D. Exponential improvement in precision for simulating sparse Hamiltonians. In Proceedings of the forty-sixth annual ACM symposium on Theory of computing, 283–292 (ACM, 2014).

Dalzell, A. M. et al. Quantum algorithms: A survey of applications and end-to-end complexities. Preprint at https://arxiv.org/abs/2310.03011 (2023).

Gustafson, E. et al. Large scale multi-node simulations of \({\mathbb{Z}}\)2 gauge theory quantum circuits using google cloud platform. In IEEE/ACM Second International Workshop on Quantum Computing Software (QCS) 72–79 (IEEE, 2021).

Uhrich, P., Castrignano, S., Uys, H. & Kastner, M. Noninvasive measurement of dynamic correlation functions. Phys. Rev. A 96, 022127 (2017).

White, S. R. Minimally entangled typical quantum states at finite temperature. Phys. Rev. Lett. 102, 190601 (2009).

Verdon, G., Marks, J., Nanda, S., Leichenauer, S. & Hidary, J. Quantum Hamiltonian-based models and the variational quantum thermalizer algorithm. Preprint at https://arxiv.org/abs/1910.02071 (2019).

Cohn, J., Yang, F., Najafi, K., Jones, B. & Freericks, J. K. Minimal effective Gibbs ansatz: A simple protocol for extracting an accurate thermal representation for quantum simulation. Phys. Rev. A 102, 022622 (2020).

Poulin, D. & Wocjan, P. Sampling from the thermal quantum Gibbs state and evaluating partition functions with a quantum computer. Phys. Rev. Lett. 103, 220502 (2009).

Motta, M. et al. Determining eigenstates and thermal states on a quantum computer using quantum imaginary time evolution. Nat. Phys. 16, 205 (2020).

Metcalf, M., Moussa, J. E., de Jong, W. A. & Sarovar, M. Engineered thermalization and cooling of quantum many-body systems. Phys. Rev. Res. 2, 023214 (2020).

Polla, S., Herasymenko, Y. & O’Brien, T. E. Quantum digital cooling. Phys. Rev. A 104, 012414 (2021).

Zhang, D.-B., Zhang, G.-Q., Xue, Z.-Y., Zhu, S.-L. & Wang, Z. D. Continuous-variable assisted thermal quantum simulation. Phys. Rev. Lett. 127, 020502 (2021).

Metcalf, M. et al. Quantum Markov chain Monte Carlo with digital dissipative dynamics on quantum computers. Quantum Sci. Technol. 7, 025017 (2022).

Jordan, P. & Wigner, E. P. Über das Paulische äquivalenzverbot. In The Collected Works of Eugene Paul Wigner 109–129 (Springer, 1993).

Childs, A. M., Su, Y., Tran, M. C., Wiebe, N. & Zhu, S. Theory of Trotter error with commutator scaling. Phys. Rev. X 11, 011020 (2021).

Şahinoğlu, B. & Somma, R. D. Hamiltonian simulation in the low-energy subspace. npj Quantum Inf. 7, 119 (2021).

Kim, Y. et al. Scalable error mitigation for noisy quantum circuits produces competitive expectation values. Nat. Phys. 19, 752–759 (2023).

Kökcü, E. et al. Algebraic compression of quantum circuits for Hamiltonian evolution. Phys. Rev. A 105, 032420 (2022).

Camps, D. et al. An algebraic quantum circuit compression algorithm for hamiltonian simulation. SIAM J. Matrix Anal. Appl. 43, 1084 (2022).

Qiskit Contributors. Qiskit: An open-source framework for quantum computing, https://doi.org/10.5281/zenodo.2573505 (2023).

Freericks, J., Cohn, J., van Dongen, P. & Krishnamurthy, H. Infinite single-particle bandwidth of a Mott–Hubbard insulator. Int. J. Modern Phys. B 30, 1642001 (2016).

Sherman, A. Evolution of the spectrum of the Hubbard model with filling. Preprint at https://arxiv.org/abs/cond-mat/0602537 (2006).

Mihaila, B. Lindhard function of a d-dimensional Fermi gas. Preprint at https://arxiv.org/abs/1111.5337 (2011).

Damascelli, A., Hussain, Z. & Shen, Z.-X. Angle-resolved photoemission studies of the cuprate superconductors. Rev. Modern Phys. 75, 473 (2003).

Ament, L. J., Van Veenendaal, M., Devereaux, T. P., Hill, J. P. & Van Den Brink, J. Resonant inelastic x-ray scattering studies of elementary excitations. Rev. Modern Phys. 83, 705 (2011).

Acknowledgements

We acknowledge helpful discussions with Erik Gustafson and Vito Scarola. We acknowledge the use of IBM Q via the IBM Q Hub at North Carolina (NC) State for this paper. The views expressed are those of the authors and do not reflect the official policy or position of the IBM Q Hub at NC State, IBM or the IBM Q team. We acknowledge the use of the QISKIT software package52 for performing the quantum simulations. This work was supported by the Department of Energy, Office of Basic Energy Sciences, Division of Materials Sciences and Engineering under grant no. DE-SC0023231. J.K.F. was also supported by the McDevitt bequest at Georgetown.

Author information

Authors and Affiliations

Contributions

A.F.K. and J.K.F. conceptualized the project. A.F.K. developed the methodology, performed the quantum computer experiments, and ran the polarizability calculations. E.K. contributed to the mathematical development for fermionic response functions, error bound calculations and designed the quantum circuits. H.A.L. ran the noisy quantum simulator and Hubbard model calculations. All authors discussed the results and contributed to the development of the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Yongxin Yao, and the other, anonymous, reviewers for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kökcü, E., Labib, H.A., Freericks, J.K. et al. A linear response framework for quantum simulation of bosonic and fermionic correlation functions. Nat Commun 15, 3881 (2024). https://doi.org/10.1038/s41467-024-47729-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-024-47729-z

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.